Technology

AIME

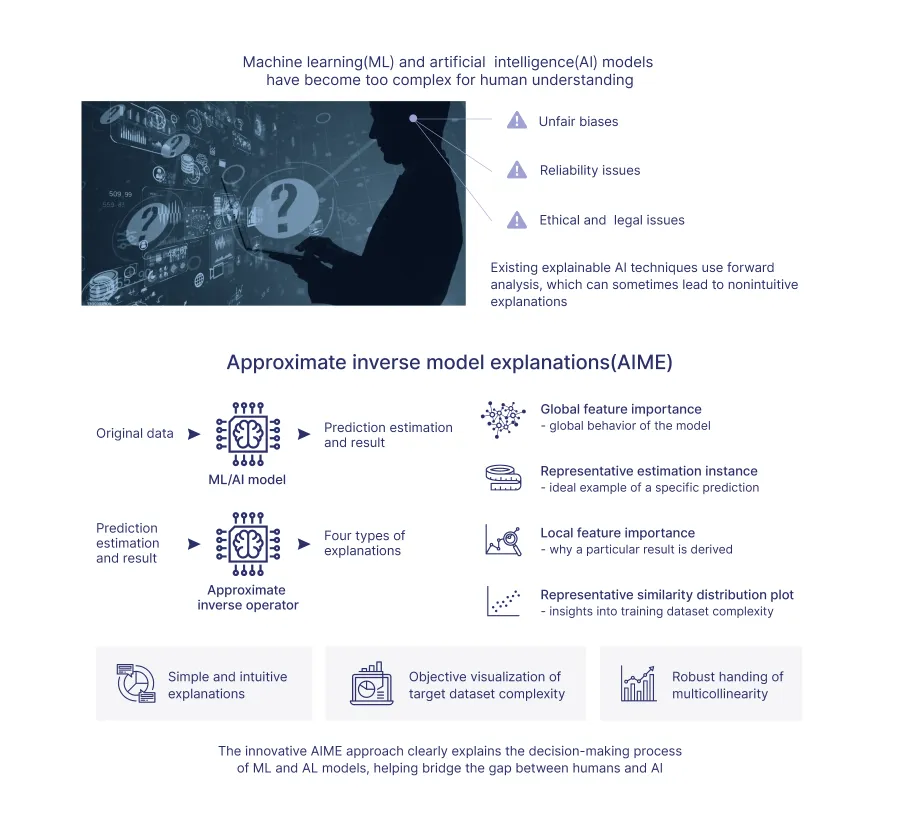

Proprietary technology for deriving simple, easy-to-understand explanations of AI estimation results

(U.S. provisional patent application filed; Japanese patent application filed; applications in preparation for the U.S., Europe, and China)

One reason AI, including generative AI, can feel hard to use is that it gives no explanation of why it arrived at the answer it did. Even a child, when asked why they did something wrong, can give a reason after the fact. Today's AI, by contrast, is often a "black box" that is too complex to understand, even for the people who developed it.

Can we really entrust truly responsible work to an AI that is a "black box"? For example, when a doctor uses AI to assist with a diagnosis, the doctor cannot rely on it without being able to explain why the AI flagged a risk. AI is also sometimes used as a job interviewer; if an AI interviewer cannot explain why it rejected candidate A and passed candidate B, the process can be considered unfair. And in the future, as self-driving cars become widespread, if an AI cannot explain the reasons for its decisions when an accident occurs, it will be difficult to determine the cause, to establish who or what was at fault and to what degree, and even to set insurance premiums.

For humanity to keep advancing, AI needs to become more human-friendly, fairer, and more transparent in how it explains its results. Approximate Inverse Model Explanations (AIME) makes this possible.

AIME derives an explanation for an AI's estimation results in much the same way a person works backward from the answer when solving a difficult math problem.
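This page describes AIME only at the level of "working backward," so the following is a rough illustration of the general idea of an approximate inverse, not AIME's actual algorithm, whose details are not given here. The sketch probes a stand-in black-box model, fits the least-squares linear map from its predictions back to its inputs using the Moore-Penrose pseudo-inverse, and reads each feature's coefficient as a measure of how strongly that feature moves with the prediction. The names `black_box` and `approximate_inverse`, the linear form of the inverse, and the {-1, 0, 1} probing grid are all hypothetical assumptions made for this example.

```python
from itertools import product


def black_box(x):
    # Hypothetical stand-in for an opaque model: in practice we could only
    # call it, not read its weights. The third feature is deliberately unused.
    return 3.0 * x[0] - 2.0 * x[1] + 0.0 * x[2]


def approximate_inverse(X, y):
    """Least-squares linear map A such that x is approximated by A * y.

    Assumption: a single scalar output and a linear inverse. The Moore-Penrose
    pseudo-inverse of the row vector y is y^T / (y . y), so A = X^T y / (y . y),
    giving one coefficient per input feature.
    """
    yy = sum(v * v for v in y)
    if yy == 0:
        raise ValueError("all predictions are zero; the inverse is undefined")
    d = len(X[0])
    return [sum(x[j] * v for x, v in zip(X, y)) / yy for j in range(d)]


# Probe the model on a balanced grid of inputs in {-1, 0, 1}^3 (an assumption).
X = [list(p) for p in product((-1.0, 0.0, 1.0), repeat=3)]
y = [black_box(x) for x in X]

# Work backward from predictions to inputs, then rank features by magnitude.
A = approximate_inverse(X, y)
ranking = sorted(range(len(A)), key=lambda j: abs(A[j]), reverse=True)
print("inverse coefficients:", [round(a, 4) for a in A])
print("features by importance:", ranking)
```

On this balanced grid the coefficients come out proportional to the model's hidden weights (3 and -2), and the unused third feature gets a coefficient of zero, so working backward recovers which inputs drove the predictions. With correlated inputs or a strongly nonlinear model, a single linear inverse like this would be a much cruder approximation.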

AIME is a technology for ensuring the fairness, accountability, and transparency that businesses will need in order to offer AI-based products and services in the future.

Approximate Inverse Model Explanations for Intuitive Machine Learning Model Predictions